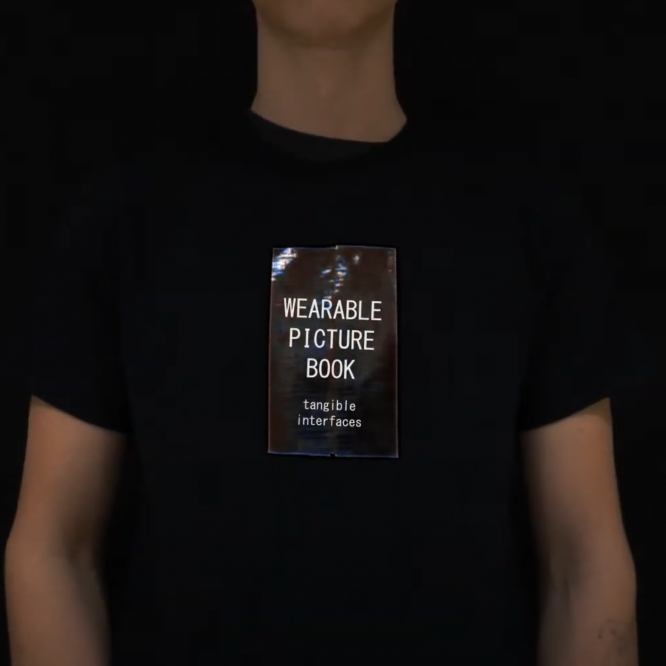

Wearable Picturebook

Experimenting with Context Aware Conversation Aids

Summary: Verbal conversation is the simplest way to transfer information between people without any digital augmentation. However, as society becomes more global and people come from increasingly varied backgrounds, it can be difficult to convey meaning without supplementary channels. For example, when there is a language barrier, it’s natural to fall back on other ways of getting the point across, whether with hand gestures or images. Our approach is an on-body device that displays images related to the context of the sentence being spoken, to aid the conversation and reinforce the saying, “you are what you say”. We use a Raspberry Pi 3 equipped with a lapel microphone to record audio and the Google Speech Recognition API for speech-to-text conversion. Our system then queries Google Images for the most relevant photo for a specified keyword from each sentence and displays the image on a wearable liquid crystal display (LCD).

System Design

Hardware

To display images that match spoken keywords, the device needs to run the speech recognition code efficiently while recording real-time microphone data. We began prototyping on a MacBook Pro because of its similarities to our intended Linux-based ARM device. Once we had working code, we began discussing which small, compact devices could run our program. We initially planned to use the Raspberry Pi Zero, as it was more readily accessible and fit the form factor we envisioned. Over the course of development, we concluded that the Pi Zero was not a good fit for the computational load of onboard speech processing. We knew we had to parallelize our code, but the Pi Zero's processor was only a 1 GHz single-core CPU. We opted for a Raspberry Pi 3 B+, which has a quad-core 1.4 GHz CPU, and the additional cores greatly increased our ability to multithread the system.

Since our project dealt with speech, we prototyped with USB microphones, as the Raspberry Pi does not support analog audio input. We started with a tabletop USB microphone that we had readily available. That microphone had the directionality we wanted, but it was bulky and we needed something more discreet for the prototype. As an alternative, we ordered a small lapel microphone and tested it with our code on the Raspberry Pi. Unfortunately, that microphone had a considerable noise floor and lacked the directionality of the tabletop USB microphone, so it picked up ambient noise. After trial and error with several microphones, we eventually found one with better directionality and a frequency response better suited to speech-to-text conversion.

In addition to the Raspberry Pi and the microphone, we also needed a screen to display the images. We initially wanted to use a flexible screen, but due to their prohibitive cost and lack of robustness, we ruled them out in favor of a 7" rigid LCD screen that was stitched to a shirt. Our final prototype consisted of the Raspberry Pi 3 B+, a webcam mic, a 7" LCD screen, and a portable battery pack.

Software

Real-time Speech Recognition

Early in the brainstorming phase, we identified two software components that would be crucial to this project: speech recognition and word-to-image query. We needed a program that would take speech from the microphone as input and convert it to text. We used a Python library called Speech Recognition coupled with the Google Speech Recognition API. The first draft of the program was sluggish, with a significant delay when attempting to recognize speech. We tried other speech recognition engines, including PocketSphinx, which runs offline, unlike the Google API, which makes calls to Google's servers. After getting a working version of the code using PocketSphinx, we realized that while it is well suited to keyword spotting, it requires pre-training on a pre-specified set of words and became computationally intensive as we expanded to a large word set.

We eventually switched back to the Google API and looked for other ways to speed up the program. We rewrote our original code to use multithreading, making better use of the Raspberry Pi's quad-core CPU and reducing much of the real-time delay. We put the microphone, recognizer, and image lookup components on separate threads so that none of them would have to wait for the others to start. This sped up the program a little, but there was still significant latency. We then looked into the documentation and realized that a function we were using from the speech recognition library waited for absolute silence before sending the audio to the Google servers for transcription. We got around this by using a 4-second-wide circular buffer that took in 1-second audio samples and sent them to the server at shorter intervals. This let us transcribe speech faster, at the cost of some transcription accuracy. Google's speech recognition uses sentence semantics to better predict the spoken words, so passing in incomplete sentences often leads to inaccurate transcriptions. Further work is warranted on optimizing the circular buffer size.
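The buffering and threading described above can be illustrated with a minimal sketch. This is not our project code: the names `RollingAudioWindow`, `run_pipeline`, and the `transcribe` callback are hypothetical, the audio chunks are plain byte strings standing in for 1-second microphone samples, and the recognizer is passed in as a function so that no real speech API is called.

```python
import queue
import threading
from collections import deque

WINDOW_CHUNKS = 4  # assumed: a 4-second window built from 1-second chunks


class RollingAudioWindow:
    """Circular buffer that keeps only the most recent audio chunks."""

    def __init__(self, max_chunks=WINDOW_CHUNKS):
        # A deque with maxlen silently drops the oldest chunk when full.
        self._chunks = deque(maxlen=max_chunks)

    def push(self, chunk):
        """Add one chunk and return all audio currently in the window."""
        self._chunks.append(chunk)
        return b"".join(self._chunks)


def run_pipeline(chunks, transcribe):
    """Run capture and recognition on separate threads (hypothetical helper).

    `chunks` stands in for the microphone stream; `transcribe` stands in
    for the call to the speech-to-text service.
    """
    audio_q = queue.Queue()
    transcripts = []

    def capture():
        for chunk in chunks:
            audio_q.put(chunk)
        audio_q.put(None)  # sentinel: no more audio

    def recognize():
        window = RollingAudioWindow()
        while True:
            chunk = audio_q.get()
            if chunk is None:
                break
            # Send the current window right away instead of waiting for silence.
            transcripts.append(transcribe(window.push(chunk)))

    workers = [threading.Thread(target=capture),
               threading.Thread(target=recognize)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return transcripts


if __name__ == "__main__":
    fake_audio = [b"a", b"b", b"c", b"d", b"e"]
    print(run_pipeline(fake_audio, lambda audio: audio.decode()))
```

Because the window slides by one chunk at a time, consecutive requests overlap and each one may start or end mid-sentence, which is one way to see where the accuracy tradeoff mentioned above comes from.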

Image Query

The second software component of our system was a program that would take the speech transcription as input, pick out keywords, and display relevant images on the connected LCD. The resulting program searched the transcription for a keyword from a list of predefined words that we stored in a Python dictionary, searched for and downloaded the most relevant image from Google, and then displayed it full screen on the worn display. There was some latency in this component, since it made calls to an external server and saved the images locally. We iterated through a few versions: one used a pre-downloaded image set, another used the library's built-in functionality to live search for any keyword rather than only those in our dictionary, and the last made calls to the server to download images only for keywords in our dictionary. Although pre-downloading the images for our dictionary is the ideal option for avoiding this latency, we will likely not pursue it, since it limits our system to a static set. In contrast, querying Google Images yields a dynamic set in which the image associated with a word can change depending on how Google ranks results at the time. We see this as a benefit, since the images reflect changes over time in a keyword's relevance in society.
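As a rough sketch of the keyword lookup described above, the following shows one way to match a transcript against a predefined dictionary and avoid re-downloading an image that was already fetched. The dictionary contents, the function names `find_keyword` and `image_for`, the choice of the first matching word, and the `fetch_image` callback (standing in for the Google Images download) are all assumptions made for illustration.

```python
import re

# Hypothetical keyword dictionary: spoken word -> image search query.
KEYWORDS = {
    "dog": "dog",
    "cat": "cat",
    "pizza": "pizza slice",
}


def find_keyword(transcript, keywords=KEYWORDS):
    """Return the search query for the first known keyword, or None."""
    for word in re.findall(r"[a-z']+", transcript.lower()):
        if word in keywords:
            return keywords[word]
    return None


def image_for(transcript, fetch_image, cache):
    """Return a local image path for the transcript, or None.

    `fetch_image(query)` is assumed to download an image and return its
    local path; `cache` is a dict so repeated keywords skip the download.
    """
    query = find_keyword(transcript)
    if query is None:
        return None
    if query not in cache:
        cache[query] = fetch_image(query)
    return cache[query]


if __name__ == "__main__":
    def fake_fetch(query):
        # Stand-in for the real download step.
        return "/tmp/" + query.replace(" ", "_") + ".jpg"

    cache = {}
    print(image_for("We ordered pizza last night", fake_fetch, cache))
```

Caching by query sits between the static pre-downloaded set and a fully live search: the first mention of a keyword still pays the network cost, while repeats within a session do not.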

Gallery

Scenario 1: Bedroom

More Immersive Storytime

Scenario 2: Office

A Better Brainstorming Experience

Scenario 3: Party

Conversational Aid for Loud Environments

Wearable Picture Book

For the video demo, we taped a green rectangle onto a shirt and projected simulated content.